CSIT_Labs

Artificial Intelligence

Programs

- Breadth First Search

- Depth First Search

- Iterative Deepening Depth First Search

- Greedy Best First Search

- A* Search

- n Queens Problem

- Water Jug Problem

- Tower of Hanoi

- Alpha Beta search

- Hill Climbing

- Tic Tac Toe

- Constraint Satisfaction Problem

- Perceptron algorithm for NAND logic gate

Theory

- Breadth First Search

- Depth First Search

- Iterative Deepening Depth First Search

- Greedy Best First Search

- A* Search

- n Queens Problem

- Water Jug Problem

- Tower of Hanoi

- Alpha Beta pruning

- Hill Climbing

- Tic Tac Toe

- Constraint Satisfaction Problem

- Perceptron algorithm for NAND logic gate

Breadth First Search

Breadth-first search (BFS) is an algorithm for traversing or searching tree or graph data structures. It starts at the tree root (or some arbitrary node of a graph, sometimes referred to as a ‘search key’[1]), and explores all of the neighbor nodes at the present depth prior to moving on to the nodes at the next depth level.

Algorithm:

- Create a queue and enqueue source into it. Mark source as visited.

- While queue is not empty, do following

- Dequeue a vertex from queue. Let this be u.

- If u is the destination, then return true.

- Else, continue following steps

- Enqueue all adjacent vertices of u which are not yet visited.

- Mark them visited and continue from step 2.

Depth First Search

Depth-first search (DFS) is an algorithm for traversing or searching tree or graph data structures. The algorithm starts at the root node (selecting some arbitrary node as the root node in the case of a graph) and explores as far as possible along each branch before backtracking.

Algorithm:

- Create a stack and push source into it. Mark source as visited.

- While stack is not empty, do following

- Pop a vertex from stack. Let this be u.

- If u is the destination, then return true.

- Else, continue following steps

- Push all adjacent vertices of u which are not yet visited.

- Mark them visited and continue from step 2.

Iterative Deepening Depth First Search

Iterative deepening depth-first search (IDDFS) is an extension to the ‘vanilla’ depth-first search algorithm, with an added constraint on the total depth explored per iteration. IDDFS is optimal like breadth-first search, but uses much less memory; at each iteration, it visits the nodes in the search tree in the same order as depth-first search, but the cumulative order in which nodes are first visited is effectively breadth-first.

Algorithm:

- Perform a depth-first search to depth 1, starting from the root.

- Provided it didn’t find the goal, perform a depth-first search to depth 2, starting from the root.

- Provided it didn’t find the goal, perform a depth-first search to depth 3, etc., etc.

Greedy Best First Search

Greedy best-first search is a best-first search that uses heuristics to improve speed. It expands the most promising node chosen according to the heuristic function. Greedy best-first search algorithm is a search algorithm which is used to solve many problems among them the most common one is the 8-puzzle. It is an informed search algorithm as it uses information about path cost and also uses heuristics to find the solution. It is a best-first search algorithm in which the cost associated with a node is f(n) = h(n). It expands the node that is estimated to be closest to the goal. It is not optimal as it does not guarantee the shortest path. It is complete as it will always find a solution if one exists. It is faster than breadth-first search but slower than A* search.

Algorithm:

- Create a priority queue and enqueue source into it. Mark source as visited.

- While queue is not empty, do following

- Dequeue a vertex from queue. Let this be u.

- If u is the destination, then return true.

- Else, continue following steps

- Enqueue all adjacent vertices of u which are not yet visited.

- Mark them visited and continue from step 2.

A* Search

A* is the most popular choice for pathfinding, because it’s fairly flexible and can be used in a wide range of contexts. A* is like Dijkstra’s Algorithm in that it can be used to find a shortest path. A* is like Greedy Best-First-Search in that it can use a heuristic to guide itself.

Algorithm:

- Create a priority queue and enqueue source into it. Mark source as visited.

- While queue is not empty, do following

- Dequeue a vertex from queue. Let this be u.

- If u is the destination, then return true.

- Else, continue following steps

- Enqueue all adjacent vertices of u which are not yet visited.

- Mark them visited and continue from step 2.

n Queens Problem

The n-queens puzzle is the problem of placing n queens on an n×n chessboard such that no two queens attack each other. Given an integer n, print all distinct solutions to the n-queens puzzle. Each solution contains distinct board configurations of the n-queens’ placement, where the solutions are a permutation of [1,2,3..n] in increasing order, here the number in the ith place denotes that the ith-column queen is placed in the row with that number. For eg below figure represents a chessboard [3 1 4 2].

Algorithm:

- Start in the leftmost column

- If all queens are placed

- return true

- Try all rows in the current column. Do following for every tried row.

- If the queen can be placed safely in this row then mark this [row, column] as part of the solution and recursively check if placing queen here leads to a solution.

- If placing the queen in [row, column] leads to a solution then return true.

- If placing queen doesn’t lead to a solution then unmark this row, column and go to step (a) to try other rows.

- If all rows have been tried and nothing worked, return false to trigger backtracking.

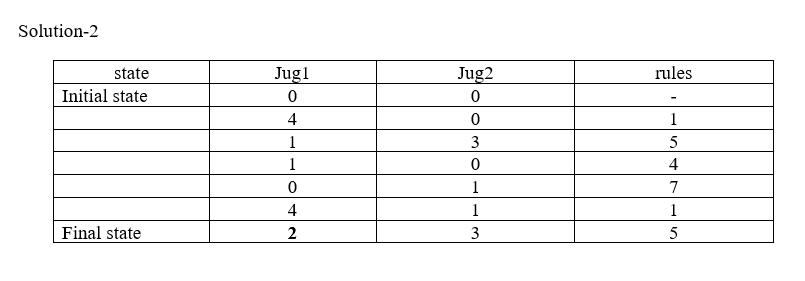

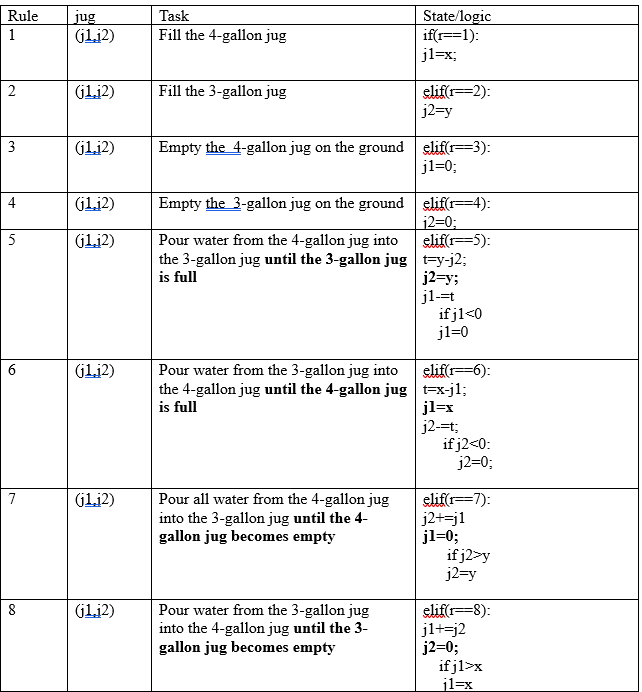

Water Jug Problem

- J1 and j2 are two jugs

- (x, y) : order pair

- x: maximum water storage capacity of jug1 is 4 gallons i.e. x=4

- y: maximum water storage capacity of jug2 is 3 gallons i.e. y=3

- No mark on jug

- Pump to fill the water into the jug

- How can you get exactly 2 gallon of water into the 4-gallons jug?

Solution:

Tower of Hanoi

The Tower of Hanoi is a mathematical game or puzzle. It consists of three rods and a number of disks of different sizes, which can slide onto any rod. The puzzle starts with the disks stacked on one rod in order of decreasing size, the smallest at the top, thus approximating a conical shape. The objective of the puzzle is to move the entire stack to another rod, obeying the following simple rules:

- Only one disk can be moved at a time.

- Each move consists of taking the upper disk from one of the stacks and placing it on top of another stack or on an empty rod.

- No larger disk may be placed on top of a smaller disk.

Algorithm:

- Move top n-1 disks from source to auxiliary tower.

- Move the nth disk from source to destination tower.

- Move the n-1 disks from auxiliary tower to destination tower.

Alpha Beta Pruning

Alpha–beta pruning is a search algorithm that seeks to decrease the number of nodes that are evaluated by the minimax algorithm in its search tree. It is an adversarial search algorithm used commonly for machine playing of two-player games (Tic-tac-toe, Chess, Go, etc.). It stops evaluating a move when at least one possibility has been found that proves the move to be worse than a previously examined move. Such moves need not be evaluated further. When applied to a standard minimax tree, it returns the same move as minimax would, but prunes away branches that cannot possibly influence the final decision.

Algorithm:

- Initialize alpha with -INFINITY and beta with +INFINITY.

- Maximizer and Minimizer are two players.

- Maximizer makes a move and calls Minimizer.

- Minimizer makes a move and calls Maximizer.

- Repeat the above two steps till any one of the player wins the game or the game ends.

- At each level, compare the value of alpha and beta.

- If alpha is greater than or equal to beta, stop further evaluation and return alpha.

- Else, return beta.

Hill climbing algorithm

Hill climbing algorithm is a local search algorithm which continuously moves in the direction of increasing elevation/value to find the peak of the mountain or best solution to the problem. It terminates when it reaches a peak value where no neighbor has a higher value. Hill climbing algorithm is a technique which is used for optimizing the mathematical problems. One of the widely discussed examples of Hill climbing algorithm is Traveling-salesman Problem in which we need to minimize the distance traveled by the salesman. It is also called greedy local search as it only looks to its good immediate neighbor state and not beyond that. A node of hill climbing algorithm has two components which are state and value. Hill Climbing is mostly used when a good heuristic is available. In this algorithm, we don’t need to maintain and handle the search tree or graph as it only keeps a single current state.

Algorithm:

- Evaluate the initial state, if it is goal state then return success and Stop.

- Loop Until a solution is found or there is no new operator left to apply.

- Select and apply an operator to the current state.

- Check new state:

- If it is goal state, then return success and quit.

- Else if it is better than the current state then assign new state as a current state.

- Else if not better than the current state, then return to step2.

- Exit.

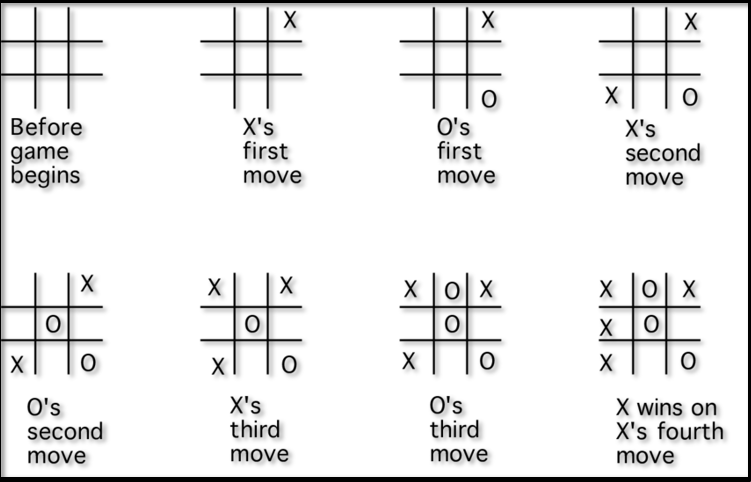

Tic-Tac-Toe

Tic-tac-toe is a game played by two players on a 3x3 grid. In this game, the first player marks moves with a circle, the second with a cross. The player who has formed a horizontal, vertical, or diag-onal sequence of three marks wins. Your task is to write a program that determines the status of a tic-tac-toe game.

Algorithm:

- Create a 3x3 grid with all values 0.

- Take input from user for row and column.

- Check if the position is already filled or not.

- If not filled, then check if it is player 1 or player 2.

- If player 1, then fill the position with 1.

- If player 2, then fill the position with 2.

- Check if any player has won the game or not.

- If yes, then print the winner and exit.

- If no, then continue from step 2.

Game Rules:

- Traditionally the first player plays with “X”. So you can decide who wants to go with “X” and who wants go with “O”.

- Only one player can play at a time.

- If any of the players have filled a square then the other player and the same player cannot override that square.

- There are only two conditions that may match will be draw or may win.

- The player that succeeds in placing three respective marks (X or O) in a horizontal, vertical or diagonal row wins the game.

Constraint Satisfaction Problem

Constraint satisfaction problems (CSPs) are mathematical questions defined as a set of objects whose state must satisfy a number of constraints or limitations. CSPs represent the entities in a problem as a homogeneous collection of finite constraints over variables, which is solved by constraint satisfaction methods. CSPs are the subject of intense research in both artificial intelligence and operations research, since the regularity in their formulation provides a common basis to analyze and solve problems of many seemingly unrelated families. CSPs often exhibit high complexity, requiring a combination of heuristics and combinatorial search methods to be solved in a reasonable time. CSPs are applied in many areas including scheduling, planning, vehicle routing, configuration, and the design of communication networks.

Algorithm:

- Initialize all the variables with their domain values.

- Select a variable and assign it a value from its domain.

- Check if the assignment is consistent with the constraints.

- If yes, then select another variable and assign it a value from its domain.

- If no, then backtrack and select another value from the domain of the previous variable.

- Repeat steps 3 to 5 until all the variables are assigned with a value from their domain.

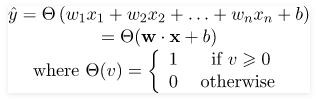

NAND using Perceptron

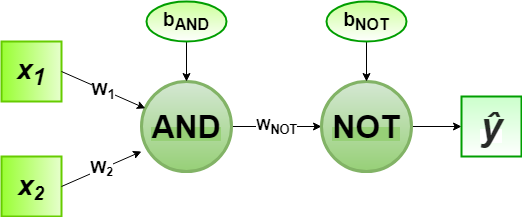

A perceptron is a single layer neural network. It is the simplest neural network model. It consists of a single layer of one or more perceptrons (neurons).

In the field of Machine Learning, the Perceptron is a Supervised Learning Algorithm for binary classifiers. The Perceptron Model implements the following function:

For a particular choice of the weight vector x and bias parameter b, the model predicts output y(cap) for the corresponding input vector x.

NAND logical function truth table for 2-bit binary variables, i.e, the input vector x: (x1,x2) and the corresponding output y –

| x1 | x2 | y |

|---|---|---|

| 0 | 0 | 1 |

| 0 | 1 | 1 |

| 1 | 0 | 1 |

| 1 | 1 | 0 |

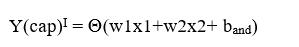

We can observe that, NAND(x1,x2) = NOT (AND (x1,x2)) Now for the corresponding weight vector w: (w1,w2) of the input vector x: (x1,x2) to the AND node, the associated Perceptron Function can be defined as:

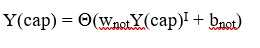

Later on, the output of AND node Y(cap)I is the input to the NOT node with weight wnot. Then the corresponding output Y(cap) is the final output of the NAND logic function and the associated Perceptron Function can be defined as:

For the implementation, considered weight parameters are w1 = 1, w2 = 1, wnot = -1 and the bias parameters are band=-1.5, bnot = 0.5.

For the implementation, considered weight parameters are w1 = 1, w2 = 1, wnot = -1 and the bias parameters are band=-1.5, bnot = 0.5.

Algorithm:

- Initialize the weights and bias with random values.

- Take inputs from the user.

- Calculate the weighted sum of inputs and weights.

- Pass the weighted sum through the activation function.

- Calculate the error.

- Update the weights and bias.

- Repeat steps 3 to 6 until the error is 0.